The first thing that you get to experience with AI is just text-based. It kind of reminds me of the early days of computers, working with DOS (wow-I’m old).

Those in academia know that we’re in an arms race. Students want to use AI to get good grades. I guess we’ve completely abandoned the idea of learning.

Teachers/Professors are trying to make sure that students are actually doing their own writing, and not just coming up with good prompts for AI generators.

So, I thought that it would be a fun little test to see which AIs were the best at making an admittedly dry topic come to life and, at the same time, fooling a commonly used and respected AI checker.

I will be testing 5 different AI tools for this one.

- ChatGPT

- Perplexity

- Grok

- Rytyr (If you don’t know that one, it’s specifically designed for creating text)

- Gemini

If you want to read the specifics of the experiment, I put them below.

The Experiment

Summary: I will have AIs write a short article about business efficiency, which is generally a dull topic. I will test whether it actually makes sense, whether it is insightful rather than dull, and finally how it fares when checked by the leading AI text checker.

Test: I will ask the AI “write a 3 paragraph essay on business efficiency that would fool an AI checker.”

I will show what the AI created here.

Then, I will test what is generated using Scribbr.com. In that sense, it is a test of both the AI’s ability to generate and the AI detector.

I will give each one a score on Task Accuracy, Insightfulness, and AI Detectability. Finally, I will give each one a composite Final Score.

In a final test, I will take the best AI-generated text and see if a slight edit will fool the AI checker, and then try a complete rewrite, using the AI text as a sort of outline.

Here’s the TL;DR.

The clear winner was Gemini, but the AI checker could still catch it every time.

Editing a bit was somewhat effective, but the AI checker still thought at least 25% was automated, and it was not really worth the effort anyway.

The best option was to use it as inspiration for a rewrite. The AI checker said that was 0% automated, even though a few sentences were kept unchanged. Doing your own writing is more effective and more the point anyway.

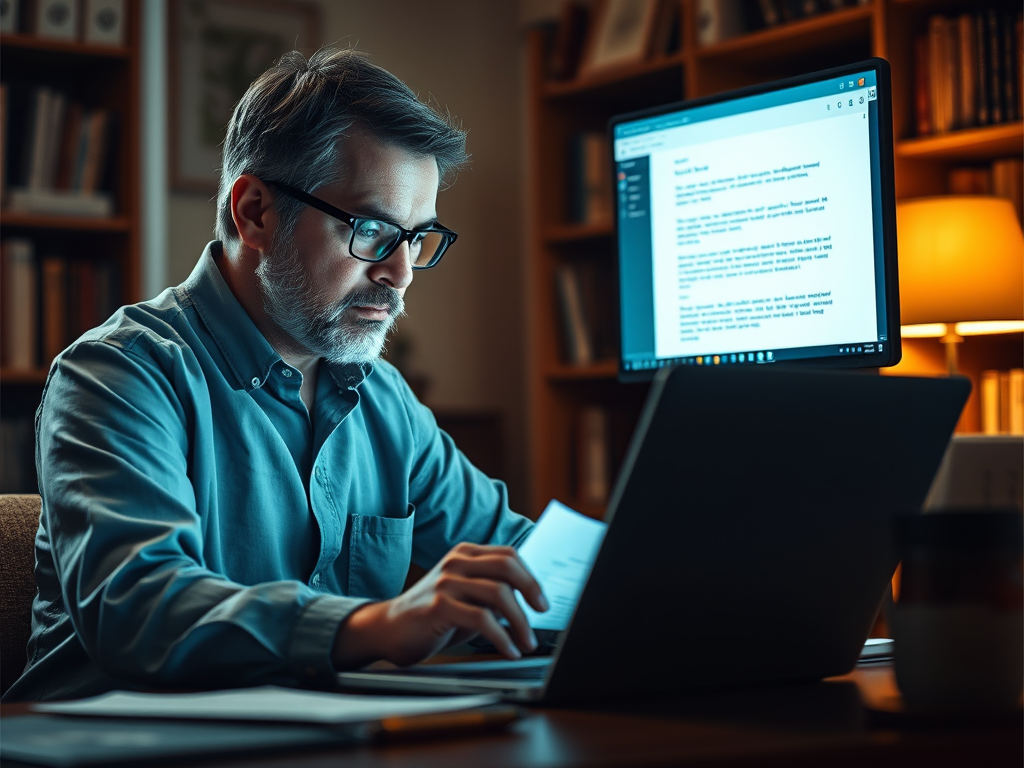

ChatGPT

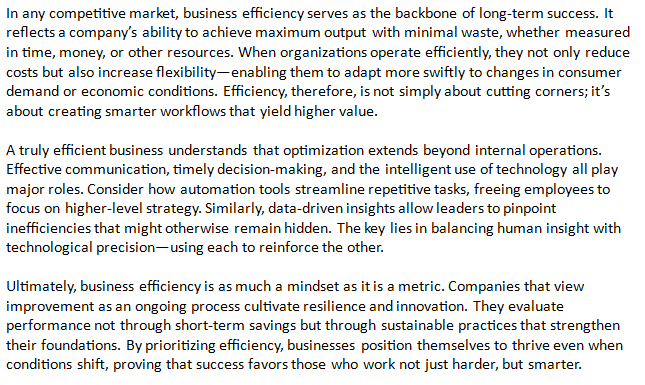

Here’s the text that it wrote.

Task Accuracy – Every AI seemed to do pretty well at this, and this is the least interesting of all the categories. It is pretty disastrous if it is off, though. This is generally good here. A

Insightfulness – I’m the wrong person to ask about this, after having read a lot of these. I guess, I would rate these as more insightful if/when they say something that makes me go “hmm.” This didn’t, but it wasn’t bad either. B

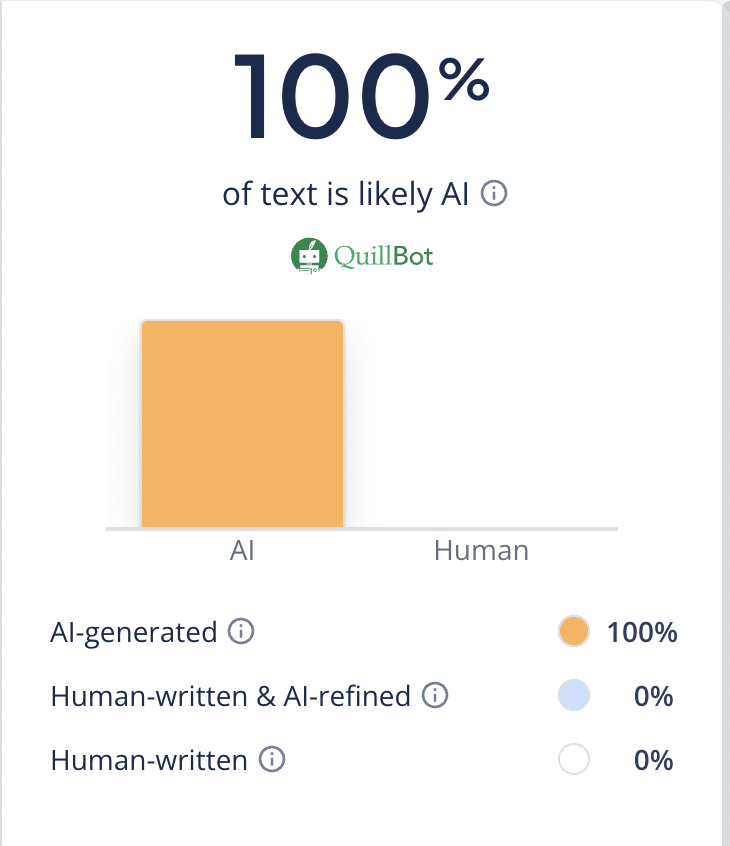

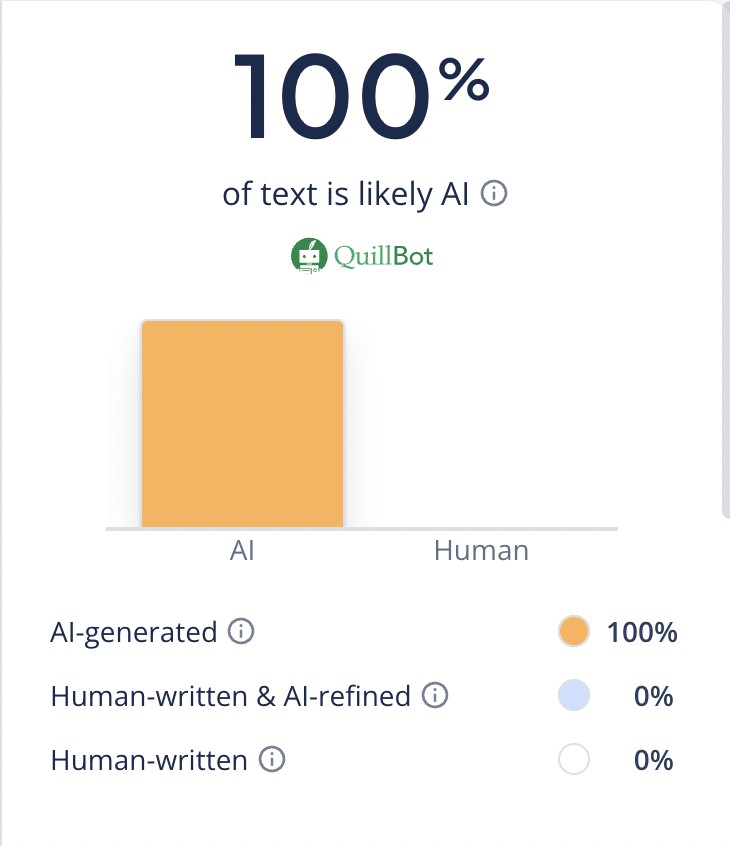

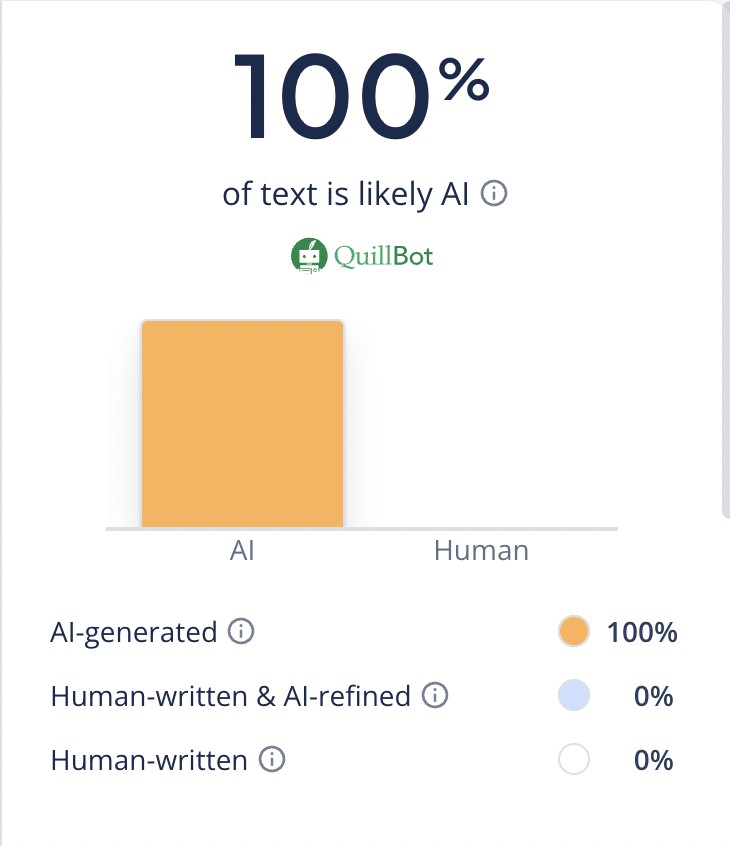

Detectability – Scribbr had no problem with this one at all. I could almost hear it laughing as it returned the following review.

Detectability score: F

Final score: C-

Perplexity

Here’s the text that it came up with.

Task Accuracy – It wrote about the topic, alright. But it seemed to be more general in a way, than the others. Here task accuracy seems a bit light. B-

Insightfulness – Wow. I think that I started to fall asleep while reading this. Let me get some caffeine to finish this…OK, back. I did enjoy “efficiency is much a mindset as it is a metric,” which is a lot of words for saying essentially nothing at all. D

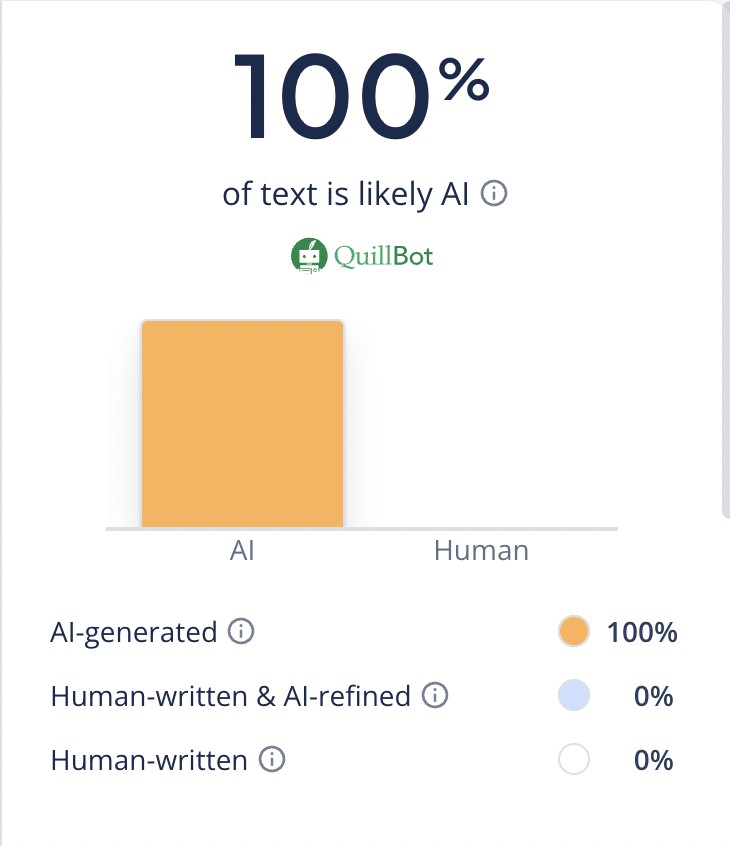

Detectability – This was no different at all from the previous one.

I can’t give it a different score than ChatGPT. The result was identical. F

Final Score: D

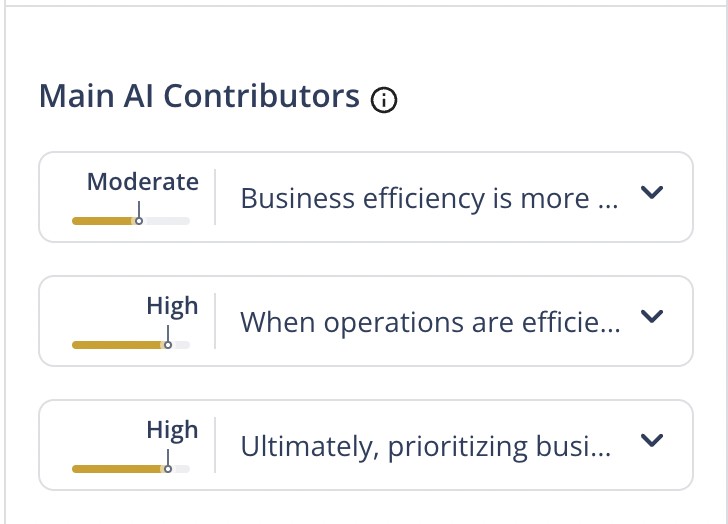

Grok

Grok did a more interesting job, although it ultimately had the same result with the AI checker.

Task Accuracy – The Grok text was actually the most interesting, maybe. Ultimately, it stayed on task. A

Insightfulness – I actually liked the way that it explained kaizen in context, which Gemini didn’t do. Also, it contained examples of how two companies created efficiencies. Ultimately, this was more interesting to read. B

Detectability – This was no different at all than the previous ones.

F

Final score: C-

Rytyr

If I could give bonus points for this being a palindrome, I would. But alas, I cannot. Unfortunately, that is where my appreciation stops. It suffers the same fate as the others, with one ever so slight bit of hope.

First, the text.

Task Accuracy – I do think it generally stuck to the task. However, there was an em-dash. I am pretty sure that em-dashes and semicolons are required by the programmers. Since part of the instruction was to fool AI checkers, and that was a blatant giveaway… A-

Insightfulness – This lacked insightfulness. C-

Detectability – This suffered the same fate.

but…

One paragraph almost fooled the checker. The previous checks had returned only High certainty. For that, this category gets a slightly higher rating. D-

Final score: C-

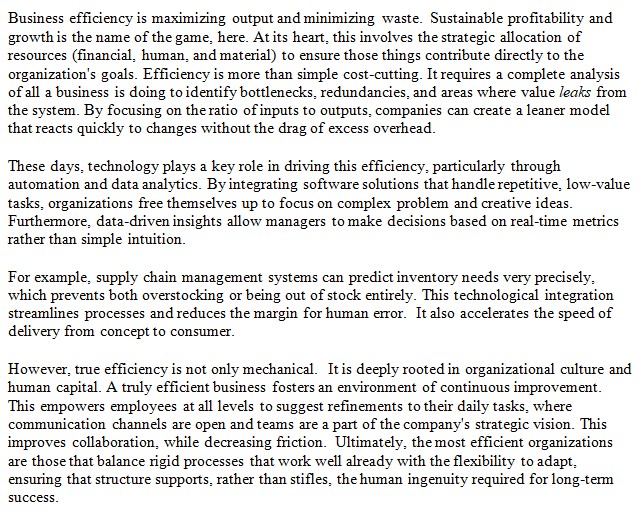

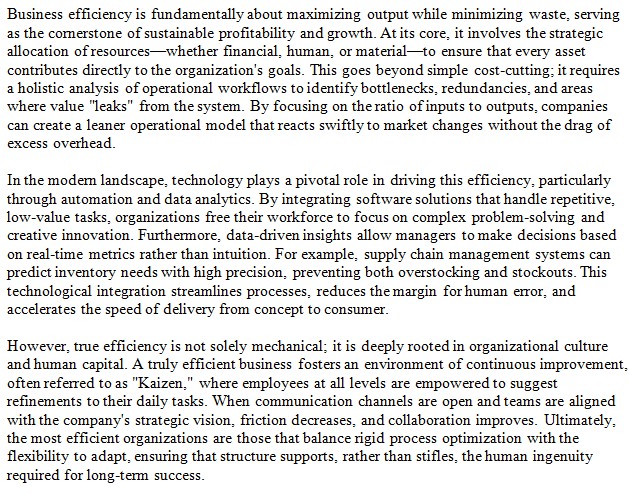

Gemini

Gemini’s text wasn’t interesting either, and it ultimately meets the same fate, but it does have the brightest spot yet. I’m not sure why exactly, but it does.

Task Accuracy – This did stay true to the topic, but it included 2 em-dashes this time. Em-dashes are like crack to AIs. I tried to get Gemini to stop using them, but that didn’t work at all. A-

Insightfulness – I didn’t really learn anything. Grok’s inclusion of examples was the best, but this also included Kaizen. B-

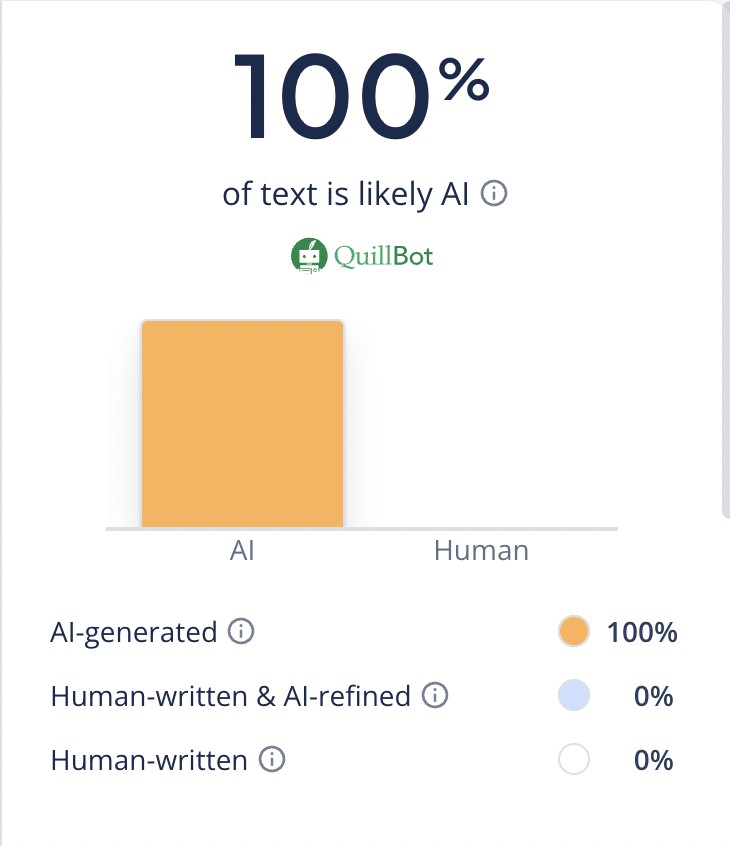

Detectability – just like the others, the AI detector knew it instantly.

But once again, the AI checker was less certain of itself, and this time it was even less certain than with all of the others. It still failed the test, but comparatively, it was the best of all. D+

Final Score: C+

But there is one more thing to test.

Editing & Rewriting

Gemini had the text that came closest to fooling the AI checker, so what happens if we do just a bit of editing?

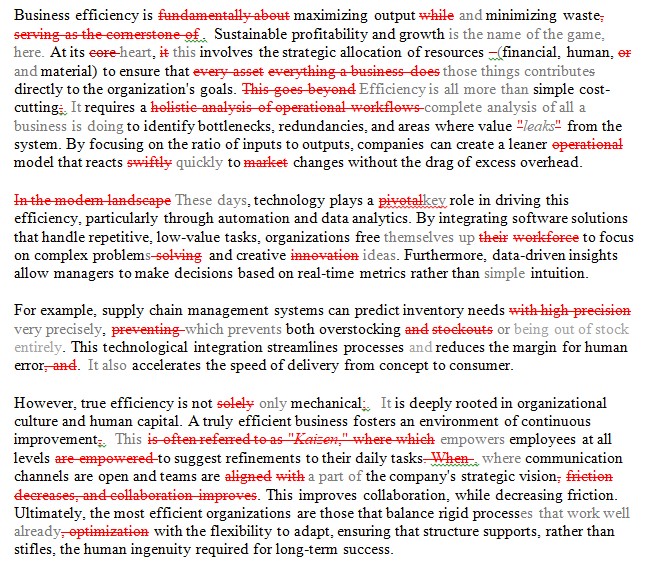

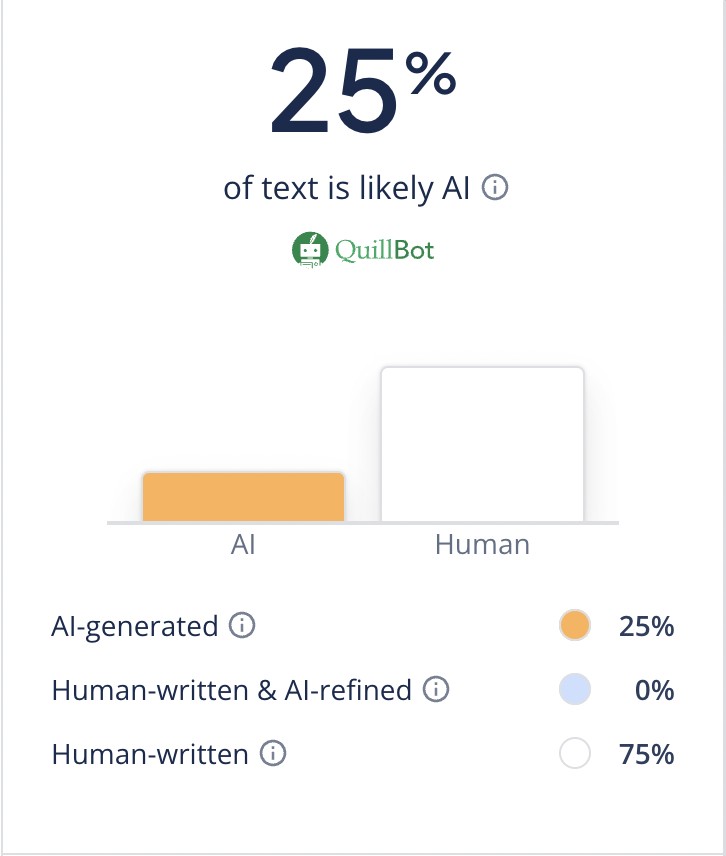

In the first test, I edited and replaced 66 of the 358 words, or 18%. I made sure that there weren’t any em-dashes or semicolons, but kept 82% of the AI-generated text. In case you really want to know, the changes I made are outlined below.
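For anyone who wants to check that kind of percentage on their own edits, here is a rough sketch in Python. It is not how I counted for this post (I counted by hand); the function name `changed_word_share` is made up for illustration, and it assumes a simple split on whitespace is a good enough definition of a "word."

```python
from difflib import SequenceMatcher


def changed_word_share(original: str, edited: str) -> float:
    """Fraction of words in `original` that no longer line up with `edited`.

    Hypothetical helper: words are whatever str.split() returns, and a word
    counts as "kept" if difflib can match it in sequence in both texts.
    """
    orig_words = original.split()
    edit_words = edited.split()
    if not orig_words:
        return 0.0
    matcher = SequenceMatcher(a=orig_words, b=edit_words, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return (len(orig_words) - kept) / len(orig_words)


# The numbers from this post: 66 of 358 words replaced.
share = 66 / 358
print(f"{share:.0%} changed, {1 - share:.0%} kept")
```

With the post's numbers, 66 divided by 358 is about 0.184, which rounds to the 18% changed and 82% kept mentioned above.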

But what did that do? Did the AI checker catch that it was 82% AI?

It did not. However, it did detect that AI was involved. More than that, it took longer to make the edits than it did to do a rewrite. Ultimately, I would say that it just wasn’t worth it to do these edits.

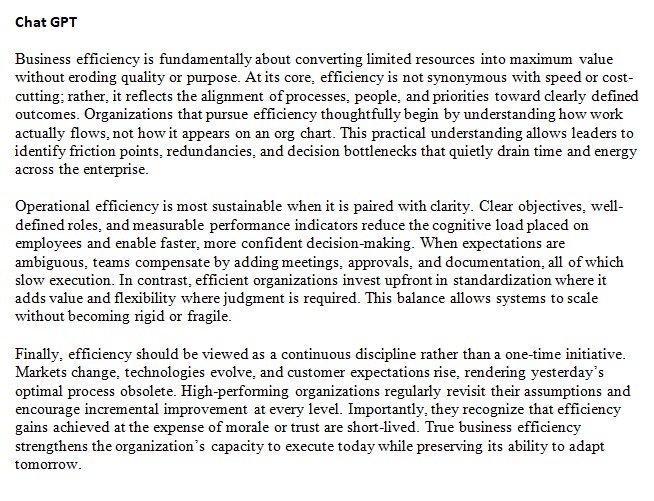

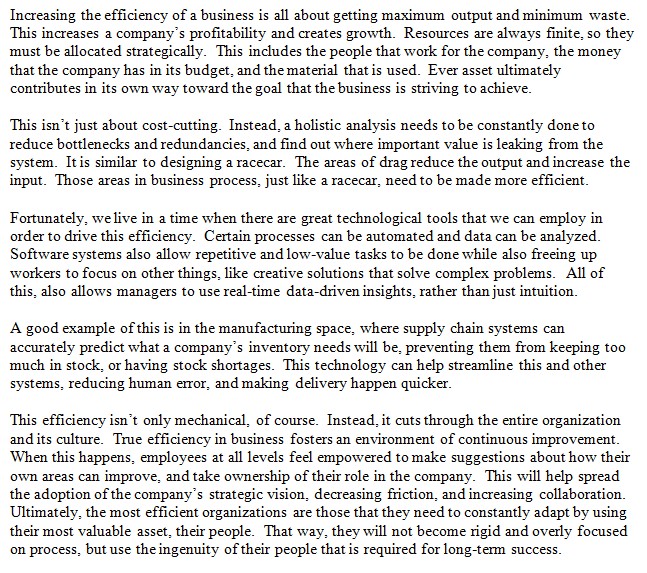

So, what happens if I take the Gemini-created text as inspiration and rewrite the entire thing (leaving a couple of sentences), following Gemini’s structure and thesis?

Here’s what I wrote.

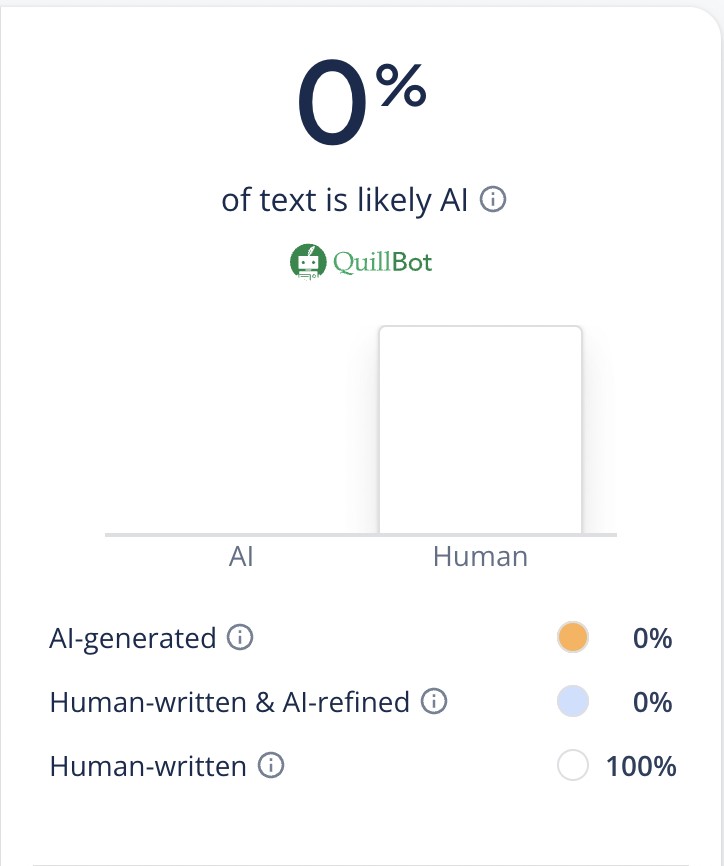

So far, so good. But what would the checker see? Remember, this is not only AI inspired, but there are a few sentences that are Gemini originals.

That’s right, kids. The checker said 0.

My takeaway is that AI is great in this instance for guiding you and influencing what to write. But if it does the writing itself, then not only are you passing off something as your own work that isn’t, you are also not fooling a savvy professor… and you aren’t learning in the process.